120.00 a

Description: When you have high GPU requirements, consider using a GPU cloud. These platforms bill by the minute. At enterprise scale, consider a provider like AWS. I've heard there are many other platforms as well.

#100#Infra#120#ML_Engineer_Infra#120.00#GPU#120.00 a#How_to_easily_rent_an_expensive_GPU_and_what_to_consider_when_choosing_one

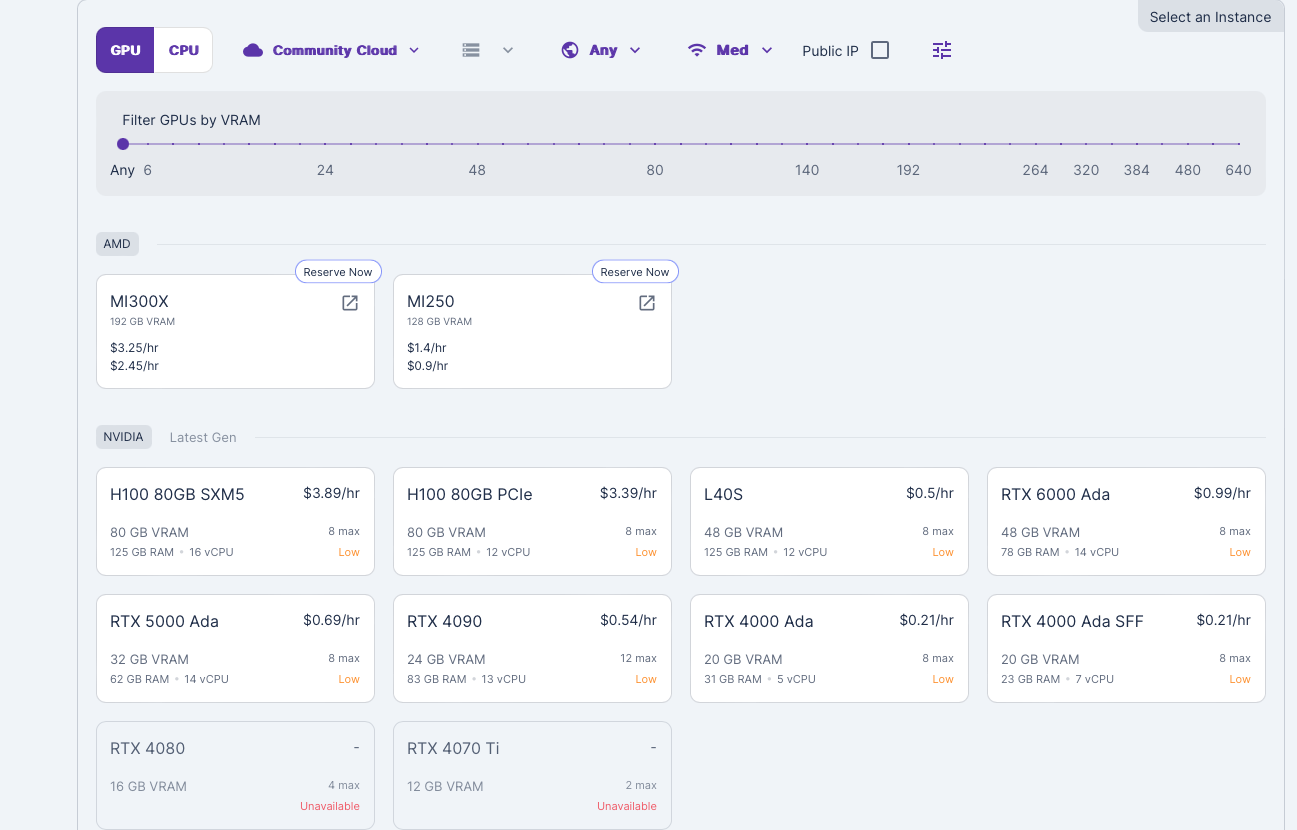

Through a GPU cloud, I can rent a GPU with 20-40 GB of VRAM for about $0.60 per hour.

Site

I wanted a GPU with about 30 GB of VRAM and considered Colab Pro, but these days it gives me either the top tier or an A40, so I looked for an alternative.

I found that some sites specialize in renting out exactly these GPUs, quickly and easily, with per-minute billing.

I think the price and usability are reasonable for an individual or small team that runs very short, infrequent workloads.
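To make the per-minute billing concrete, here is a minimal sketch of the arithmetic. The $0.60 hourly rate is the rough figure quoted above. The function name `session_cost`, the `billing_unit_minutes` parameter, and the rule that usage is rounded up to the next started billing unit are my own assumptions for illustration, not any provider's documented policy.

```python
import math


def session_cost(minutes_used: float,
                 hourly_rate_usd: float = 0.60,
                 billing_unit_minutes: int = 1) -> float:
    """Estimate the cost of one GPU session.

    Assumption: usage is rounded UP to the next started billing unit
    (1 = per-minute billing, 60 = per-hour billing).
    """
    units = math.ceil(minutes_used / billing_unit_minutes)
    billed_minutes = units * billing_unit_minutes
    return round(billed_minutes * hourly_rate_usd / 60, 2)


# A 45-minute job, billed per minute vs. per started hour.
per_minute = session_cost(45)
per_hour = session_cost(45, billing_unit_minutes=60)
print(f"per-minute billing: ${per_minute:.2f}")
print(f"per-hour billing:   ${per_hour:.2f}")
```

For short, infrequent jobs the gap between the two billing models is the part of each hour you would otherwise pay for without using it.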

GPU price comparison site

Why I use Runpod instead of AWS

I'm familiar with AWS, but I chose a dedicated GPU cloud because of the range of GPU VRAM choices.

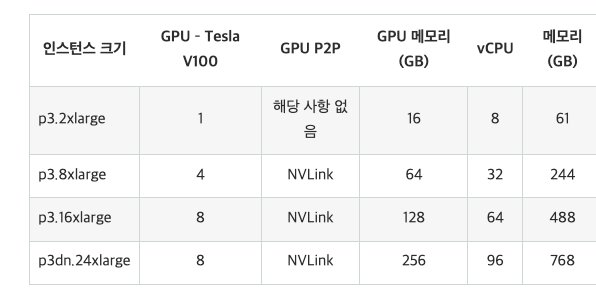

The range that's hard to get at home is 20-40 GB of GPU VRAM, and that range is also hard to get on AWS. In addition, with AWS you have to apply and wait before you can use a GPU.
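As a rough illustration of picking by VRAM range, the sketch below filters a small hand-written catalog for cards in the 20-40 GB window. The VRAM sizes are taken from public spec sheets. The catalog itself (`GPU_VRAM_GB`) and the helper `gpus_in_range` are hypothetical, and nothing here says which cards a given platform actually offers.

```python
# VRAM in GB from public spec sheets; an illustrative catalog only,
# not a list of what any particular provider rents out.
GPU_VRAM_GB = {
    "T4": 16,
    "RTX 4090": 24,
    "A10G": 24,
    "L4": 24,
    "A100 40GB": 40,
    "A40": 48,
}


def gpus_in_range(catalog: dict[str, int],
                  min_gb: int = 20,
                  max_gb: int = 40) -> list[str]:
    """Return the GPU names whose VRAM falls within [min_gb, max_gb]."""
    return sorted(name for name, gb in catalog.items()
                  if min_gb <= gb <= max_gb)


print(gpus_in_range(GPU_VRAM_GB))
```

The same filter can be pointed at whatever list a price comparison site gives you, once you copy the names and VRAM sizes into a dictionary.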

Of course, AWS has its advantages: it connects well with other AWS services and suits sustained use (for example, running for about a month). But if you care about getting the specific VRAM size you want, only need the GPU for a few hours at a time, and don't otherwise depend on AWS, I judged that a suitable GPU cloud is fine (there are many besides Runpod).

Range of choices as of April 2024